AI Workflow Automation for Enterprises | eZintegrations

November 7, 2025

TL;DR (Key Takeaways)

AI Workflow Automation augments deterministic workflows with LLM steps to classify, extract, summarize, and normalize unstructured inputs (documents, emails, chats). Outputs are validated against schemas, routed by confidence thresholds, and logged for auditability—so downstream actions remain deterministic and reliable.

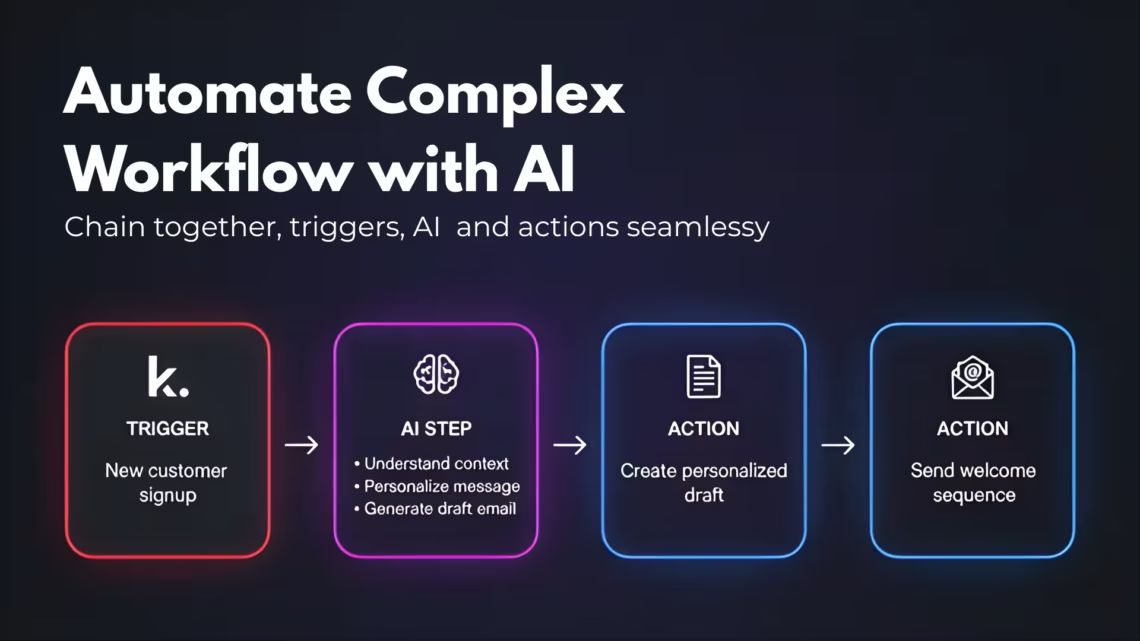

What is AI Workflow Automation?

Keep your traditional triggers and actions, but insert AI steps where human interpretation used to be needed: understanding intent, extracting fields, summarizing threads, or normalizing messy text into strict JSON that the rest of the flow can trust.

Where AI fits in a flow

- Pre-process (OCR, language detection)

- Infer (classify/extract/summarize)

- Validate (JSON Schema, enums, regex, business rules)

- Decide (confidence thresholds; human-in-the-loop if uncertain)

- Proceed (DB/API updates, notifications, downstream workflows)
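
The five stages above can be sketched as a small pipeline. This is a minimal illustration, not the eZintegrations implementation: the `infer` function is a keyword stub standing in for an LLM call, and the intent list and the 0.8 threshold are assumed values chosen for the example.

```python
from dataclasses import dataclass

ALLOWED_INTENTS = {"billing", "support", "sales"}  # hypothetical enum
CONFIDENCE_THRESHOLD = 0.8                          # hypothetical cutoff


@dataclass
class Inference:
    intent: str
    confidence: float


def preprocess(raw: str) -> str:
    # Stand-in for OCR / language detection: just normalize whitespace.
    return " ".join(raw.split())


def infer(text: str) -> Inference:
    # Stub for an LLM call; a keyword rule keeps the sketch runnable offline.
    lowered = text.lower()
    if "invoice" in lowered:
        return Inference("billing", 0.93)
    if "price" in lowered:
        return Inference("sales", 0.62)
    return Inference("unknown", 0.30)


def validate(result: Inference) -> bool:
    # Enum and range checks; a real flow would add schema and business rules.
    return result.intent in ALLOWED_INTENTS and 0.0 <= result.confidence <= 1.0


def decide(result: Inference) -> str:
    # Invalid or low-confidence results go to a human reviewer.
    if not validate(result) or result.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"
    return "auto"


def run_flow(raw: str) -> dict:
    result = infer(preprocess(raw))
    route = decide(result)
    # "Proceed": a real flow would call a DB or API here; we return the decision.
    return {"intent": result.intent, "confidence": result.confidence, "route": route}
```

Only the `infer` step is probabilistic. Everything after it is ordinary code, which is what keeps downstream actions deterministic.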

High-value use cases

- Finance: Extract invoice/PO data → ERP; detect duplicates.

- Sales: Parse inbound emails → intent → create/update CRM objects.

- Support: Auto-triage tickets, draft replies for agent approval.

- Compliance: Detect and redact PII before storage/sharing.

Guardrails that matter

- Strict schemas (types, enums, ranges, required fields).

- Self-check prompts (“return valid JSON; if unsure, set confidence low”).

- Fallbacks to forms/manual review when confidence < threshold.

- Cost control with caching, batching, small-model defaults and escalation.

- Observability: log prompts/outputs, token usage, acceptance rate, correction rate.
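
As one way to picture the strict-schema guardrail, here is a hand-rolled validator using only the Python standard library. The invoice fields, the currency enum, and the `validate_output` name are assumptions made for this sketch. A production flow would more likely use a JSON Schema validator.

```python
import json

# Hypothetical invoice schema: field -> (type, required, extra check)
SCHEMA = {
    "invoice_number": (str, True, lambda v: len(v) > 0),
    "currency": (str, True, lambda v: v in {"USD", "EUR", "GBP"}),
    "total": (float, True, lambda v: v >= 0),
    "confidence": (float, True, lambda v: 0.0 <= v <= 1.0),
    "po_number": (str, False, lambda v: True),
}


def validate_output(raw_json: str) -> list:
    """Return a list of validation errors; an empty list means the output passed."""
    try:
        data = json.loads(raw_json)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be an object"]

    errors = []
    for name, (expected, required, check) in SCHEMA.items():
        if name not in data:
            if required:
                errors.append(f"missing required field: {name}")
            continue
        value = data[name]
        # Accept ints where floats are expected, but never booleans.
        if expected is float and isinstance(value, int) and not isinstance(value, bool):
            value = float(value)
        if not isinstance(value, expected):
            errors.append(f"wrong type for {name}")
        elif not check(value):
            errors.append(f"value out of range for {name}")
    # Reject fields the schema does not know about.
    errors.extend(f"unexpected field: {key}" for key in data if key not in SCHEMA)
    return errors
```

An empty error list lets the item continue automatically. Any error is a signal to retry or to fall back to a form or manual review.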

Implementation blueprint

- Define target schema + examples (positive/negative).

- Add retrieval context (if needed) with hybrid search for better grounding.

- Validate programmatically; retry with guidance on failures.

- Start with human-in-the-loop; raise automation as quality improves.

- Track precision/recall, latency, and cost per item.
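
The "validate programmatically; retry with guidance" step might look like the loop below. It is a sketch under assumptions: `model` is any callable that takes a prompt and returns text, `flaky_model` is a fake used only to make the example runnable, and the two required fields are illustrative.

```python
import json
from typing import Callable, Optional

REQUIRED_FIELDS = ("intent", "confidence")  # hypothetical target schema


def check(raw: str) -> Optional[str]:
    """Return guidance for the model, or None if the output is acceptable."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return "Output was not valid JSON. Return a single JSON object only."
    if not isinstance(data, dict):
        return "Output must be a JSON object."
    missing = [name for name in REQUIRED_FIELDS if name not in data]
    if missing:
        return f"Missing fields: {', '.join(missing)}."
    return None


def extract_with_retry(
    model: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> Optional[dict]:
    current = prompt
    for _ in range(max_attempts):
        raw = model(current)
        error = check(raw)
        if error is None:
            return json.loads(raw)
        # Feed the validation error back as guidance for the next attempt.
        current = f"{prompt}\n\nYour previous answer was rejected: {error}"
    return None  # caller should fall back to manual review


def flaky_model(prompt: str) -> str:
    # Fake model: answers in prose first, then complies once it sees feedback.
    if "rejected" in prompt:
        return '{"intent": "billing", "confidence": 0.9}'
    return "Sure! The intent is billing."
```

Capping the attempts keeps cost bounded. When the loop returns `None`, the item goes to the human-in-the-loop path instead of being retried indefinitely.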

AI Workflow vs AI Agent

AI Workflow Automation steps are bounded inside a deterministic pipeline: the flow, not the model, decides what runs next. An AI Agent chooses the next step autonomously using planning and memory.

FAQs

1. Which model size should I use?

Start with smaller, faster models for most tasks. Escalate to a larger model only for highly complex or ambiguous inputs, or for specific edge cases that smaller models cannot resolve.
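
A small-first routing policy can be sketched as follows. Both model functions are stubs, and the 0.8 threshold is an assumed value. The point is only the control flow: the larger model runs when the smaller one is not confident.

```python
from typing import Callable, Tuple

# A model here is any callable returning (label, confidence).
Model = Callable[[str], Tuple[str, float]]


def classify(text: str, small: Model, large: Model, threshold: float = 0.8):
    """Try the small model first; escalate only when its confidence is low."""
    label, confidence = small(text)
    if confidence >= threshold:
        return label, confidence, "small"
    label, confidence = large(text)
    return label, confidence, "large"


def small_model(text: str) -> Tuple[str, float]:
    # Stub: confident only on an obvious keyword.
    if "refund" in text.lower():
        return "billing", 0.95
    return "other", 0.40


def large_model(text: str) -> Tuple[str, float]:
    # Stub for a slower, more capable model.
    return "support", 0.88
```

Returning which tier answered makes it easy to log the escalation rate and see how often the larger model is really needed.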

2. How do I protect sensitive data?

Redact sensitive data, keep processing within specified regional processing zones, select zero-retention vendors, and establish a Data Processing Agreement (DPA) with each vendor.
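
Redaction can be illustrated with a simple pattern-based pass. This sketch covers only email addresses and US-style Social Security numbers, and the patterns are deliberately simplified assumptions. Real PII detection needs broader coverage and usually a dedicated tool.

```python
import re

# Simplified, illustrative patterns; not exhaustive PII detection.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each match with a bracketed label such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

Running redaction before the text reaches a model or a log means the sensitive values never leave the boundary you control.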

3. How does eZintegrations™ handle unstructured data?

eZintegrations™ inserts LLM inference steps into workflows that touch unstructured data (like emails, PDFs, chats, or images) to classify, extract, summarize, and normalize that data into strict JSON format.

4. What if the AI model provides an incorrect output?

To mitigate errors, the platform uses confidence scores for validation, implements human review processes, and leverages rule-based post-processing to automatically correct predictable mistakes.

5. Can AI outputs be audited?

Yes. eZintegrations™ provides built-in observability and auditability by storing the prompt, the output, all validation results, and any subsequent reviewer actions taken by humans.
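
To make the idea concrete, an audit entry holding those four items could be modeled as below. This is an assumed shape for illustration only, not the storage format eZintegrations uses. The `AuditRecord` class, its field names, and the content hash are all hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AuditRecord:
    item_id: str
    prompt: str
    output: str
    validation_errors: list = field(default_factory=list)
    reviewer_actions: list = field(default_factory=list)

    def to_json_line(self) -> str:
        """Serialize to one JSON line suitable for an append-only log."""
        body = asdict(self)
        # A hash over the record body makes later edits detectable.
        canonical = json.dumps(body, sort_keys=True).encode("utf-8")
        body["sha256"] = hashlib.sha256(canonical).hexdigest()
        return json.dumps(body, sort_keys=True)
```

One line per processed item, written to append-only storage, is enough to reconstruct what the model was asked, what it returned, and what a reviewer changed.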

6. How does eZintegrations™ control costs and ensure reliability?

The platform includes built-in observability and cost controls through features like caching, batching requests, and model routing. It also validates outputs using schemas and routes by confidence to ensure determinism and reliability.
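
Caching is the simplest of these cost controls to illustrate. The wrapper below is a hypothetical in-memory sketch, not the platform's mechanism: identical prompts are answered from a dictionary, so the underlying model is called once per distinct input.

```python
import hashlib
from typing import Callable, Dict


class CachedModel:
    """Wrap a prompt -> text callable with an in-memory cache."""

    def __init__(self, model: Callable[[str], str]) -> None:
        self._model = model
        self._cache: Dict[str, str] = {}
        self.calls = 0  # number of real (uncached) model calls

    def __call__(self, prompt: str) -> str:
        # Hash the prompt so long inputs do not become long dictionary keys.
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key not in self._cache:
            self.calls += 1
            self._cache[key] = self._model(prompt)
        return self._cache[key]
```

Exact-match caching only pays off when inputs repeat, for example resubmitted documents or templated emails. The `calls` counter gives a quick way to measure the hit rate.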

Next Steps: