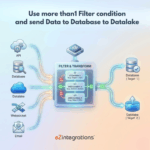

How to Route Data to a Database and Datalake Using Multiple Filters

| Workflow Name: | More Than 1 Filter Condition with Database and Datalake as Target |
|---|---|
| Purpose: | Route filtered records to Database & Datalake |
| Benefit: | Simultaneous data availability in DB & Datalake |
| Who Uses It: | Data Teams; IT |
| System Type: | Data Integration Workflow |
| On-Premise Supported: | Yes |
| Industry: | Analytics / Data Engineering |
| Outcome: | Filtered records sent to Database & Datalake |

Description

| Problem Before: | Manual routing of filtered data |
|---|---|
| Solution Overview: | Automated routing to Database and Datalake using multiple filters |
| Key Features: | Filter; validate; route; schedule |
| Business Impact: | Faster; accurate dual-target ingestion |
| Productivity Gain: | Removes manual routing |
| Cost Savings: | Reduces labor and errors |
| Security & Compliance: | Secure connections |

More Than 1 Filter Condition with Database and Datalake as Target

The Database & Datalake Multi-Filter Workflow applies multiple filter conditions to incoming records and sends the records that pass to both a database and a Datalake at the same time. This keeps relevant, high-quality data available on both platforms.

Advanced Filtering for Synchronized Data Storage

The system applies multiple predefined filters to incoming data, validates the results, and loads the refined records into the target database and Datalake in near real time. This workflow helps data and IT teams maintain consistent, structured datasets while reducing manual effort and improving data reliability.
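The filter, validate, and dual-load flow described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the product's implementation: an in-memory SQLite database stands in for the target database, a local JSON Lines file stands in for the Datalake, and the field names (`id`, `region`, `amount`), the two filter conditions, the `orders` table, and the `route_records` function are all hypothetical.

```python
import json
import sqlite3
import tempfile
from pathlib import Path

# Hypothetical filter conditions; a record must satisfy every one of them.
FILTERS = [
    lambda r: r.get("region") == "EU",
    lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 100,
]


def is_valid(record):
    """Hypothetical validation step: the record needs a positive integer id."""
    return isinstance(record.get("id"), int) and record["id"] > 0


def route_records(records, conn, lake_path):
    """Apply all filters, validate the survivors, then write them to both targets."""
    filtered = [r for r in records if all(check(r) for check in FILTERS)]
    selected = [r for r in filtered if is_valid(r)]

    # Target 1: database insert (SQLite is only a stand-in for the real database).
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (id, region, amount) VALUES (?, ?, ?)",
        [(r["id"], r["region"], r["amount"]) for r in selected],
    )
    conn.commit()

    # Target 2: Datalake upload (a JSON Lines file is only a stand-in for object storage).
    with open(lake_path, "a", encoding="utf-8") as fh:
        for r in selected:
            fh.write(json.dumps(r) + "\n")

    return selected


if __name__ == "__main__":
    sample = [
        {"id": 1, "region": "EU", "amount": 250},
        {"id": 2, "region": "US", "amount": 900},  # fails the region filter
        {"id": 3, "region": "EU", "amount": 40},   # fails the amount filter
    ]
    with tempfile.TemporaryDirectory() as tmp:
        routed = route_records(sample, sqlite3.connect(":memory:"), Path(tmp) / "lake.jsonl")
        print(f"routed {len(routed)} of {len(sample)} records to both targets")
```

In this sketch both writes use the same `selected` list, so the database and the Datalake stand-ins receive an identical set of records. A real deployment would also need to decide what happens when one target succeeds and the other fails.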

Watch Demo

| Video Title: | API to API integration using 2 filter operations |
|---|---|
| Duration: | 6:51 |

Outcome & Benefits

| Time Savings: | Removes manual routing |
|---|---|
| Cost Reduction: | Lower operational overhead |
| Accuracy: | High via validation |
| Productivity: | Faster dual-target ingestion |

Industry & Function

| Function: | Data Routing |
|---|---|
| System Type: | Data Integration Workflow |
| Industry: | Analytics / Data Engineering |

Functional Details

| Use Case Type: | Data Integration |
|---|---|
| Source Object: | Multiple Source Records |
| Target Object: | Database & Datalake |
| Scheduling: | Real-time or batch |
| Primary Users: | Data Engineers; Analysts |
| KPI Improved: | Data availability; processing speed |
| AI/ML Step: | Not required |
| Scalability Tier: | Enterprise |

Technical Details

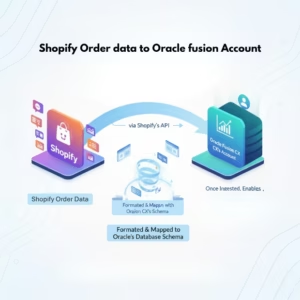

| Source Type: | API / Database / Email |
|---|---|
| Source Name: | Multiple Sources |
| API Endpoint URL: | – |
| HTTP Method: | – |
| Auth Type: | – |
| Rate Limit: | – |
| Pagination: | – |
| Schema/Objects: | Filtered records |
| Transformation Ops: | Filter; validate; normalize |
| Error Handling: | Log and retry failures |
| Orchestration Trigger: | On upload or scheduled |
| Batch Size: | Configurable |
| Parallelism: | Multi-source concurrent |
| Target Type: | Database & Datalake |
| Target Name: | Database & Datalake |
| Target Method: | Insert / Upload |
| Ack Handling: | Logging |
| Throughput: | High-volume records |
| Latency: | Seconds/minutes |
| Logging/Monitoring: | Ingestion logs |
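The "Batch Size: Configurable" and "Error Handling: Log and retry failures" entries above might look something like the sketch below. The actual retry policy and log format are not documented here, so everything in it is an assumption: the function names `batches` and `load_with_retry`, the `max_attempts` and `delay_seconds` parameters, and the `write_batch` callback that stands in for a database insert or Datalake upload.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ingestion")


def batches(records, batch_size):
    """Yield consecutive slices of at most batch_size records."""
    for start in range(0, len(records), batch_size):
        yield records[start:start + batch_size]


def load_with_retry(batch, write_batch, max_attempts=3, delay_seconds=0.0):
    """Call write_batch(batch); log each failure and retry up to max_attempts times.

    Returns True once a write succeeds, False if every attempt fails.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            write_batch(batch)
            log.info("loaded %d records on attempt %d", len(batch), attempt)
            return True
        except Exception as exc:  # broad on purpose: real error types are unknown here
            log.warning("attempt %d/%d failed: %s", attempt, max_attempts, exc)
            if attempt < max_attempts:
                time.sleep(delay_seconds)
    log.error("giving up on batch of %d records", len(batch))
    return False
```

A caller would loop over `batches(records, batch_size)` and pass each batch to `load_with_retry` together with the writer for one target. Batches that return `False` can be collected for later inspection rather than silently dropped.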

Connectivity & Deployment

| On-Premise Supported: | Yes |
|---|---|
| Supported Protocols: | API; DB; Email |
| Cloud Support: | Hybrid |
| Security & Compliance: | Secure connections |

FAQ

1. What is the 'More Than 1 Filter Condition with Database and Datalake as Target' workflow?

It is a data integration workflow that routes filtered records to both a database and a Datalake after applying multiple filter conditions, ensuring simultaneous data availability in both targets.

2. How do multiple filter conditions work in this workflow?

The workflow evaluates more than one predefined filter condition on the source data and routes only the records that satisfy all conditions to the database and Datalake.

3. What types of source systems are supported?

The workflow supports APIs, databases, and file-based sources, applying the filters consistently before routing data to the targets.

4. How frequently can the workflow run?

The workflow can run on a scheduled basis, near real-time, or on-demand depending on data processing and operational requirements.

5. What happens to records that do not meet the filter conditions?

Records that do not satisfy all filter conditions are excluded and are not routed to either the database or the Datalake.
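The all-conditions rule and the exclusion of non-matching records can be illustrated with a short sketch. The condition names, the `status` and `country` fields, and the `partition` helper are hypothetical; recording which conditions failed is an optional extra for logging, not something the workflow is stated to do.

```python
# Hypothetical named conditions; the names make exclusions easy to report.
CONDITIONS = {
    "status_is_active": lambda r: r.get("status") == "active",
    "country_in_scope": lambda r: r.get("country") in {"DE", "FR"},
}


def failed_conditions(record):
    """Return the names of the conditions this record does not satisfy."""
    return [name for name, check in CONDITIONS.items() if not check(record)]


def partition(records):
    """Split records into (routed, excluded).

    A record is routed only if it passes every condition (logical AND).
    Excluded records are paired with the names of the conditions they failed.
    """
    routed, excluded = [], []
    for record in records:
        failures = failed_conditions(record)
        if failures:
            excluded.append((record, failures))
        else:
            routed.append(record)
    return routed, excluded


if __name__ == "__main__":
    sample = [
        {"id": 1, "status": "active", "country": "DE"},
        {"id": 2, "status": "inactive", "country": "FR"},
        {"id": 3, "status": "active", "country": "US"},
    ]
    routed, excluded = partition(sample)
    print("routed ids:", [r["id"] for r in routed])
    for record, failures in excluded:
        print("excluded id", record["id"], "failed:", failures)
```

Only the `routed` list would be passed on to the database and Datalake writers; the `excluded` list goes to neither target.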

6. Who typically uses this workflow?

Data teams and IT teams use this workflow to ensure filtered, high-quality data is available simultaneously in both the database and Datalake for analytics and operations.

7. Is on-premise deployment supported?

Yes, this workflow supports on-premise data sources as well as hybrid environments.

8. What are the key benefits of this workflow?

It ensures simultaneous data availability in both database and Datalake, improves data quality, reduces manual routing effort, and supports efficient analytics and operational workflows.

Resources

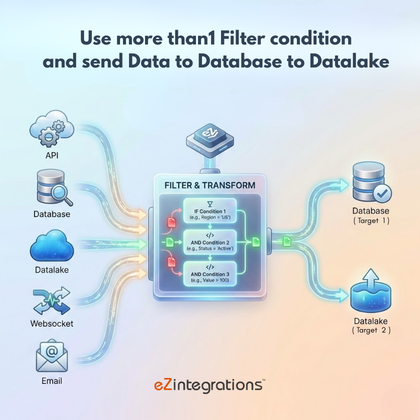

Case Study

| Customer Name: | Data Team |
|---|---|
| Problem: | Manual routing of filtered data |
| Solution: | Automated dual-target ingestion |
| ROI: | Faster workflows; reduced errors |
| Industry: | Analytics / Data Engineering |
| Outcome: | Filtered records sent to Database & Datalake |